The world of technology is moving at a speed that is hard to keep up with. Just when you think you have seen it all, a new breakthrough changes the game. This time, the change is coming from OpenAI, and it is set to transform how we protect our digital world.

The company has just introduced something extraordinary. It is called Aardvark OpenAI. This is not just another AI tool. It is an intelligent, autonomous security researcher powered by the mighty GPT-5.

Imagine having a world-class cybersecurity expert working for you 24/7. This expert constantly scans your software code, looking for weak spots and dangerous vulnerabilities. It does not just find problems; it explains them, tests them, and even suggests how to fix them. This is the power of Aardvark OpenAI.

This article is your complete guide to this groundbreaking new technology. We will explore what Aardvark OpenAI is, how it works, and why it matters to everyone who uses software. We will also tackle the biggest questions people are asking right now. Is there an OpenAI IPO on the horizon? Is Microsoft buying OpenAI? What is really going on with the company?

Let us dive in and explore the future of cybersecurity and one of the most exciting tech companies in the world.

What is Aardvark OpenAI? Meet Your New AI Security Guard

In simple terms, Aardvark OpenAI is an AI agent. The company calls it an “agentic security researcher.” Think of an agent as a digital employee that can perform complex tasks on its own. This particular employee’s job is to find and fix security flaws in software code.

Why is this such a big deal? Every year, tens of thousands of new vulnerabilities are discovered in software. These are the weak spots that hackers use to break into systems, steal data, and cause chaos. For the people defending these systems, it is a constant, exhausting race to find and patch these holes before the bad guys can exploit them.

Aardvark OpenAI is designed to tip this balance in favor of the defenders. It is like giving every software development team a superhuman security expert who never sleeps. This agent is powered by GPT-5, the latest and most advanced large language model from OpenAI. This gives Aardvark OpenAI deep reasoning capabilities, allowing it to understand code almost like a human would.

The goal of Aardvark OpenAI is to help developers and security teams discover and fix security vulnerabilities at a massive scale. It is currently in a “private beta.” This means OpenAI is inviting a select group of partners to test it out, provide feedback, and help refine its capabilities before a wider public release.

How Does Aardvark OpenAI Actually Work? A Step-by-Step Breakdown

The magic of Aardvark OpenAI lies in its process. It does not rely on traditional techniques like fuzzing, which involves throwing large amounts of random or malformed data at a program to see if it breaks. Instead, Aardvark OpenAI uses the reasoning power of its GPT-5 brain to read and understand code in a more natural, intelligent way.
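For contrast, here is classic fuzzing in its simplest form. This is a minimal sketch for illustration only, not anything Aardvark uses. `parse_age` is a hypothetical toy function with a planted bug. The fuzzer feeds it random short strings and records every input that crashes it with something other than a deliberate `ValueError`.

```python
import random
import string


def parse_age(text: str) -> int:
    """Hypothetical toy parser with a planted bug: it assumes the input is never empty."""
    if text[0] == "-":  # raises IndexError on "", the bug the fuzzer should find
        raise ValueError("age cannot be negative")
    return int(text)


def fuzz(target, trials: int = 1000, seed: int = 0) -> list[str]:
    """Feed random short strings to `target` and return the inputs that crash it.

    ValueError counts as a graceful rejection of bad input; any other
    exception is recorded as a crash worth investigating.
    """
    rng = random.Random(seed)  # seeded so every run is reproducible
    alphabet = string.digits + "-x "
    crashes: set[str] = set()
    for _ in range(trials):
        length = rng.randint(0, 4)  # 0 allows the empty string
        candidate = "".join(rng.choice(alphabet) for _ in range(length))
        try:
            target(candidate)
        except ValueError:
            pass  # expected rejection, not a bug
        except Exception:
            crashes.add(candidate)
    return sorted(crashes)


print(fuzz(parse_age))  # ['']
```

Notice how blind this is. The fuzzer knows nothing about what `parse_age` is for and only stumbles on the empty-string case by chance. A reasoning-based reviewer would read the code and spot the "input is never empty" assumption directly.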

Matt Knight, a vice president at OpenAI, put it perfectly: “In some way, it looks for bugs very much in the same way that a human security researcher might.” It reads the code, analyzes what it does, thinks about how it could be misused, and even writes and runs tests to check its theories.

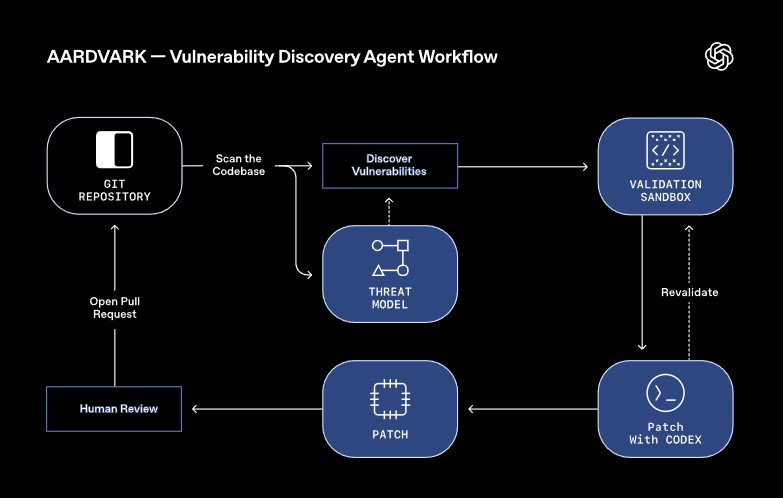

Here is a closer look at the multi-stage pipeline that Aardvark OpenAI uses to keep code safe:

1. The Analysis Phase: Building a Threat Model

When Aardvark OpenAI is connected to a code repository, it does not just start looking for bugs at random. First, it performs a deep analysis of the entire project. It reads through the codebase to understand the project’s purpose, its design, and what its security goals should be. From this, it builds a “threat model.” This is essentially a map of what needs to be protected and what the potential dangers are.
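One way to picture a threat model is as a structured summary of what a codebase must protect. The sketch below is purely illustrative. OpenAI has not published Aardvark's internal format, so the `ThreatModel` class, its fields, and the example project are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ThreatModel:
    """Hypothetical threat-model record; the field names are illustrative assumptions."""
    project: str
    assets: list[str] = field(default_factory=list)           # what must be protected
    entry_points: list[str] = field(default_factory=list)     # where untrusted input arrives
    security_goals: list[str] = field(default_factory=list)   # properties that must always hold
    sensitive_paths: list[str] = field(default_factory=list)  # code areas that deserve extra scrutiny

    def is_sensitive(self, path: str) -> bool:
        """Return True if a file path falls under one of the sensitive areas."""
        return any(path.startswith(prefix) for prefix in self.sensitive_paths)


model = ThreatModel(
    project="example-web-shop",
    assets=["customer passwords", "payment tokens"],
    entry_points=["HTTP request handlers", "file uploads"],
    security_goals=["only authenticated users can view their orders"],
    sensitive_paths=["src/auth/", "src/payments/"],
)

print(model.is_sensitive("src/auth/login.py"))  # True
print(model.is_sensitive("docs/README.md"))     # False
```

Once a model like this exists, later stages can use it to decide which changes deserve the closest look.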

2. The Commit Scanning Phase: Hunting for Vulnerabilities

Once the threat model is in place, Aardvark OpenAI gets to work. It continuously monitors all new changes, or “commits,” to the code. Every time a developer adds or changes a piece of code, Aardvark OpenAI scans it. It checks this new code against the entire repository and its threat model to see if it introduces any new vulnerabilities.

If you connect an existing project, Aardvark OpenAI is smart enough to scan the entire history to find problems that are already there. When it finds something, it does not just point at it. It explains the vulnerability step-by-step, annotating the code so a human can easily understand what is wrong and why.
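Commit scanning starts with knowing exactly what a commit changed. Here is a rough sketch of that first step, not Aardvark's actual implementation. The function below parses a unified diff, the format produced by `git diff` and `git show`, and collects the added lines for each file. It then flags files in high-risk areas for closer review. `SENSITIVE_PREFIXES` is a hypothetical stand-in for knowledge drawn from a threat model.

```python
# Hypothetical stand-in for what a threat model marks as high-risk code.
SENSITIVE_PREFIXES = ("src/auth/", "src/payments/")


def added_lines_by_file(diff_text: str) -> dict[str, list[str]]:
    """Map each file in a unified diff to the lines the commit added."""
    result: dict[str, list[str]] = {}
    current = None   # file whose hunks we are reading
    in_hunk = False  # True once we pass an "@@" hunk header
    for line in diff_text.splitlines():
        if line.startswith("diff "):
            current, in_hunk = None, False  # a new file section begins
        elif not in_hunk and line.startswith("+++ "):
            path = line[4:].split("\t")[0]  # drop any trailing timestamp
            # git writes new paths as "b/<path>"; "/dev/null" means the file was deleted
            current = None if path == "/dev/null" else path.removeprefix("b/")
            if current is not None:
                result.setdefault(current, [])
        elif line.startswith("@@"):
            in_hunk = True
        elif in_hunk and line.startswith("+") and current is not None:
            result[current].append(line[1:])
    return result


def files_needing_review(diff_text: str) -> list[str]:
    """Changed files with added lines inside a sensitive area, sorted for stable output."""
    return sorted(
        path
        for path, added in added_lines_by_file(diff_text).items()
        if added and path.startswith(SENSITIVE_PREFIXES)
    )


SAMPLE_DIFF = """\
diff --git a/src/auth/login.py b/src/auth/login.py
--- a/src/auth/login.py
+++ b/src/auth/login.py
@@ -1,2 +1,2 @@
 def login(cursor, user):
-    cursor.execute("SELECT * FROM users WHERE name = ?", (user,))
+    cursor.execute("SELECT * FROM users WHERE name = '%s'" % user)
diff --git a/docs/README.md b/docs/README.md
--- a/docs/README.md
+++ b/docs/README.md
@@ -1 +1 @@
-Old text
+New text
"""

print(files_needing_review(SAMPLE_DIFF))  # ['src/auth/login.py']
```

The sample commit is exactly the kind of change worth catching. It quietly swaps a safe parameterized query for string formatting in the login code. Locating the change is the easy part. Judging whether it is exploitable is where the reasoning described above comes in.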

3. The Validation Phase: Proving the Problem is Real

One of the biggest issues with automated security tools is “false positives” – alerting about a problem that is not actually a real threat. Aardvark OpenAI tackles this head-on.

When it identifies a potential vulnerability, it does not just report it. It tries to prove it. The agent attempts to trigger the vulnerability in an isolated, sandboxed environment. This is a safe space where it can test the flaw without causing any damage. By confirming the exploitability, Aardvark OpenAI ensures that it is only bringing real, high-quality problems to the team’s attention.
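The idea of confirming a finding before reporting it can be sketched with a simple harness. This is a minimal illustration under loose assumptions. It runs a proof-of-concept script in a separate Python process, with a timeout and a throwaway working directory. The finding counts as confirmed only if the script prints an agreed marker. The function name `confirm_finding` and the `CONFIRMED` marker are hypothetical. A child process is not a real security sandbox; an isolated test environment of the kind described above would rely on something like containers or virtual machines.

```python
import subprocess
import sys
import tempfile

MARKER = "CONFIRMED"  # hypothetical string a proof-of-concept prints when it succeeds


def confirm_finding(poc_source: str, timeout_s: float = 5.0) -> bool:
    """Run a proof-of-concept in a child process; True only if it prints MARKER.

    A child process is NOT a real sandbox: it only bounds run time and gives
    the script a disposable working directory. Use containers or VMs in practice.
    """
    with tempfile.TemporaryDirectory() as workdir:
        try:
            completed = subprocess.run(
                [sys.executable, "-I", "-c", poc_source],  # -I: ignore env vars and user site-packages
                cwd=workdir,
                capture_output=True,
                text=True,
                timeout=timeout_s,
            )
        except subprocess.TimeoutExpired:
            return False  # a hang is not counted as a confirmed exploit here
    return MARKER in completed.stdout


# Hypothetical finding: joining a user-supplied name onto an upload folder lets "../" escape it.
path_escape_poc = """
import posixpath
base = "/srv/uploads"
target = posixpath.normpath(posixpath.join(base, "../etc/passwd"))
if not target.startswith(base + "/"):
    print("CONFIRMED")
"""

print(confirm_finding(path_escape_poc))                 # True: the flaw is real
print(confirm_finding('print("nothing to see here")'))  # False: nothing confirmed
```

Requiring a positive signal, rather than treating any error as proof, is what keeps unconfirmed guesses out of the report.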

4. The Patching Phase: Offering a Solution

Finding a problem is only half the battle. Fixing it is the other half. This is where Aardvark OpenAI truly shines. It teams up with OpenAI Codex, the company’s powerful coding AI.

After finding and validating a vulnerability, Aardvark OpenAI uses Codex to generate a patch – a piece of code that fixes the flaw. It even scans this proposed patch itself to make sure the fix does not introduce new problems. It then attaches this patch to its report. This allows a human developer to review the fix and, if it looks good, apply it with a single click.
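To make "a patch" concrete, here is the kind of before-and-after pair such a report might contain, using SQL injection as the example flaw. It is a hypothetical illustration, not actual Aardvark or Codex output. `find_user_vulnerable` builds its query by pasting user input into the SQL text, so a crafted name can change the query's logic. The patched `find_user` passes the name as a bound parameter instead.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with two users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, email TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "alice@example.com"), ("bob", "bob@example.com")],
    )
    return conn


def find_user_vulnerable(conn, name):
    # BEFORE: user input is pasted into the SQL text, so it can rewrite the query
    return conn.execute(f"SELECT email FROM users WHERE name = '{name}'").fetchall()


def find_user(conn, name):
    # AFTER (the patch): the "?" placeholder makes sqlite3 treat name strictly as data
    return conn.execute("SELECT email FROM users WHERE name = ?", (name,)).fetchall()


conn = make_db()
attack = "x' OR '1'='1"
print(len(find_user_vulnerable(conn, attack)))  # 2: the injected OR clause matched every row
print(len(find_user(conn, attack)))             # 0: no user is literally named that
```

The same attack input doubles as a regression check. Before the patch it exposes every row; after the patch it returns nothing, while ordinary lookups such as `find_user(conn, "alice")` still work.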

This entire workflow is designed to fit seamlessly into the tools developers already use, like GitHub. The promise of Aardvark OpenAI is stronger security without slowing down innovation.

The Real-World Impact of Aardvark OpenAI: It’s Already Working

This is not just a theoretical project. Aardvark OpenAI has already been hard at work for several months. It has been running continuously on OpenAI’s own internal codebases and on the code of a few external alpha partners.

The results have been impressive.

Inside OpenAI, the agent has surfaced meaningful vulnerabilities that human researchers might have missed. It has actively contributed to strengthening the company’s own defensive cybersecurity posture.

Partners who have tested it have been surprised by the depth of its analysis. Aardvark OpenAI has proven capable of finding issues that only occur under very complex and specific conditions, the kind that are often overlooked.

In formal benchmark tests, Aardvark OpenAI was set loose on special “golden” repositories filled with known and artificially introduced vulnerabilities. The result? It successfully identified 92% of them. That is a high recall rate, meaning it missed relatively few of the planted flaws, and OpenAI presents it as evidence of the agent's real-world effectiveness.
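Recall is the share of known flaws a scanner actually finds. It pairs naturally with precision, the share of its reports that are real, which is the false-positive problem discussed in the validation phase. The sketch below computes both from sets of vulnerability IDs. The IDs and counts are made up, chosen only so that recall comes out at 92% like the reported figure.

```python
def recall_and_precision(known: set[str], reported: set[str]) -> tuple[float, float]:
    """Recall = found / known flaws; precision = found / everything reported."""
    true_positives = len(known & reported)
    recall = true_positives / len(known) if known else 0.0
    precision = true_positives / len(reported) if reported else 0.0
    return recall, precision


# Hypothetical benchmark: 50 planted flaws; the scanner finds 46 of them
# and also raises 2 alerts that turn out to be false positives.
known = {f"VULN-{i}" for i in range(50)}
reported = {f"VULN-{i}" for i in range(46)} | {"FALSE-1", "FALSE-2"}

recall, precision = recall_and_precision(known, reported)
print(f"recall={recall:.2f} precision={precision:.2f}")  # recall=0.92 precision=0.96
```

A tool can reach high recall simply by flagging everything. That is why precision, and the validation step that protects it, matters just as much.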

Aardvark OpenAI and Open Source: Giving Back to the Community

Open source software is the foundation of the modern internet. But it often relies on volunteers who may not have vast security resources. Aardvark OpenAI is already being used to help secure this critical ecosystem.

The agent has been applied to various open-source projects, where it has discovered numerous security vulnerabilities that nobody knew about. OpenAI has responsibly disclosed these findings to the project maintainers, and ten of them have been assigned official Common Vulnerabilities and Exposures (CVE) identifiers. A CVE identifier is a standardized catalog number for a publicly disclosed security flaw, so everyone can refer to the same issue unambiguously. It does not by itself indicate how serious a flaw is; severity is usually rated separately, for example with a CVSS score.
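CVE identifiers follow a fixed pattern: the prefix `CVE`, a four-digit year, and a sequence number of at least four digits, as in `CVE-2021-44228`. A small validator for that format might look like this. It checks only the shape of an ID, not whether the entry actually exists in the CVE list.

```python
import re

# CVE ID syntax: "CVE-", a four-digit year, "-", then a sequence number of 4+ digits.
CVE_PATTERN = re.compile(r"CVE-\d{4}-\d{4,}")


def is_valid_cve_id(text: str) -> bool:
    """Check only the *format* of an ID; it does not confirm the CVE exists."""
    return CVE_PATTERN.fullmatch(text) is not None


for candidate in ["CVE-2014-0160", "CVE-2021-44228", "CVE-2024-12", "CVE 2024 3094"]:
    print(candidate, is_valid_cve_id(candidate))
# CVE-2014-0160 True
# CVE-2021-44228 True
# CVE-2024-12 False
# CVE 2024 3094 False
```

Using `fullmatch` rather than `search` matters here. It rejects strings that merely contain an ID somewhere inside them.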

OpenAI has announced plans to offer pro-bono scanning to select non-commercial open-source projects. This is a commitment to giving back and helping make the entire digital ecosystem safer for everyone.

Why Aardvark OpenAI is a Game-Changer for Cybersecurity

Software is now the backbone of every industry, from banking to healthcare to our power grids. This means that a vulnerability in a piece of software is not just a bug; it is a systemic risk to businesses, infrastructure, and society itself.

To understand the scale of the problem, consider that over 40,000 CVEs were reported in 2024 alone. OpenAI’s own testing suggests that around 1.2% of all code commits introduce a bug. A single, small change can have massive consequences.
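A quick back-of-the-envelope calculation shows what a 1.2% rate means in practice. It rests on a simplifying assumption: each commit independently has a 1.2% chance of introducing a bug, which real codebases will not satisfy exactly. Under that assumption, n commits contain on average 0.012 × n bug-introducing commits. The chance that at least one of them introduces a bug is 1 − (1 − 0.012)^n.

```python
BUG_RATE = 0.012  # the ~1.2% per-commit figure cited above; independence is an assumption


def expected_buggy_commits(n_commits: int, rate: float = BUG_RATE) -> float:
    """Average number of bug-introducing commits among n commits."""
    return n_commits * rate


def chance_of_at_least_one(n_commits: int, rate: float = BUG_RATE) -> float:
    """Probability that at least one of n independent commits introduces a bug."""
    return 1 - (1 - rate) ** n_commits


for n in (10, 100, 1000):
    print(f"{n:>5} commits: expect {expected_buggy_commits(n):.1f} buggy, "
          f"P(at least one) = {chance_of_at_least_one(n):.0%}")
```

Even a modest 100 commits leaves about a 70% chance that at least one introduced a bug. Continuous scanning of every commit addresses exactly that.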

Aardvark OpenAI represents a fundamental shift. It is a “defender-first” model. It provides continuous, intelligent protection that evolves as the code evolves. By catching vulnerabilities the moment they are introduced, validating that they are real threats, and offering clear, ready-to-use fixes, it has the potential to dramatically strengthen our digital defenses.

It is like having a vigilant guard who not only spots a trespasser but also immediately hands you a blueprint for reinforcing the fence.

The Competitive Landscape: Google’s CodeMender and the AI Security Race

OpenAI is not the only company exploring this space. The potential for AI to revolutionize cybersecurity is too great to ignore.

Earlier in the same month that Aardvark OpenAI was announced, Google unveiled its own similar project called “CodeMender.” Google described it as a tool that detects, patches, and even rewrites vulnerable code to prevent future exploits. Just like OpenAI, Google also plans to work with maintainers of critical open-source projects to integrate these AI-generated security patches.

This signals the start of a new front in the AI wars. We are moving beyond chatbots and image generators into practical, enterprise-level tools that can have a direct impact on business safety and operational integrity. Aardvark OpenAI and CodeMender are leading this charge, positioning themselves as essential tools for modern software development.

Beyond Aardvark: Your Top Questions About OpenAI Answered

The announcement of Aardvark OpenAI has brought the company back into the spotlight, and people have many questions about its direction, leadership, and future. Here are clear, straightforward answers to the most common questions.

1. What has happened to OpenAI?

OpenAI is going through a period of massive growth and transformation. Recent key events include:

- Corporate Restructuring: They completed a restructuring that converted their for-profit arm into a public benefit corporation and renegotiated their partnership with Microsoft, including its revenue-sharing agreements.

- New Products: The launch of Aardvark OpenAI is a major product announcement.

- Infrastructure Expansion: A partnership with Oracle to build a massive one-gigawatt data center campus in Michigan.

- Monetization: They have started charging for extra video generations on their Sora AI platform.

2. Is OpenAI going to IPO?

It looks increasingly likely, though nothing is confirmed. Reports indicate that the company is laying the groundwork for a potential Initial Public Offering (IPO), and Sam Altman has described going public as the most likely path given OpenAI's capital needs. No official date has been set, but analysts are speculating about a staggering valuation that could reach as high as $1 trillion by 2027. Altman has also said the company's annual revenue is well above the widely reported $13 billion figure and that it could reach $100 billion by 2027, which makes an IPO a logical next step.

3. Is Microsoft buying OpenAI?

No, Microsoft is not buying OpenAI. They have a very deep and strategic partnership. Microsoft has invested billions of dollars in OpenAI and provides much of its cloud computing infrastructure. The recent restructuring revised their revenue-sharing deal and left Microsoft with a stake of roughly 27%, but OpenAI remains an independent company.

4. Why are staff leaving OpenAI?

While there have been some high-profile departures, turnover like this is common in fast-moving, competitive tech companies. AI talent is in extremely high demand, with Google, Meta, and startups all competing for the same experts. Some departing staff have publicly cited disagreements over priorities such as safety, but no single public reason points to widespread dissatisfaction across the company.

5. Why did Elon Musk quit OpenAI?

Elon Musk was a co-founder of OpenAI but left its board in 2018. The reasons were never fully detailed, but it was reportedly due to a conflict of interest as Tesla was increasingly developing its own AI for self-driving cars. Recently, Musk and Altman have been in a public dispute over a personal matter involving a Tesla pre-order, highlighting their ongoing friction.

6. Who owns 49% of OpenAI?

This is a common misconception. Microsoft’s investment is massive, but it does not own 49% of OpenAI. The ownership structure is unusual. OpenAI began as a nonprofit and later added a “capped-profit” arm that limited the returns investors like Microsoft could earn. In October 2025 it restructured again. The for-profit business became a public benefit corporation that remains controlled by the nonprofit, now called the OpenAI Foundation, whose mission is to ensure AI benefits all of humanity. Under that arrangement, Microsoft holds a stake of roughly 27%.

7. Could Apple buy OpenAI?

In theory, a company with Apple’s vast cash reserves could attempt to buy almost anyone. However, it is highly unlikely. OpenAI has structured itself to remain independent and mission-focused. A takeover by a giant like Apple would contradict its core founding principles. A partnership is more plausible than an acquisition.

8. Does OpenAI make a profit?

Not yet. OpenAI generates significant revenue, but revenue is not the same as profit. While it started as a non-profit, it created a “capped-profit” arm to attract the investment needed to fund its expensive computing needs, and those computing costs remain enormous. Its revenue streams include ChatGPT Plus, API access for developers, and enterprise deals, and Sam Altman has said annual revenue is well above $13 billion. Even so, the company is widely reported to be spending far more than it earns and operating at a loss.

9. What is Sam Altman’s net worth?

Sam Altman’s exact net worth is not public. Notably, Altman has said he holds no direct equity in OpenAI, so the company’s soaring valuation does not translate directly into personal wealth for him. His fortune comes mainly from his other investments in the tech sector, and public estimates put it in the billions of dollars.

10. Can I buy stock in OpenAI?

Not yet. Since OpenAI is a privately held company, its shares are not available on the public stock market. Investment currently happens through private funding rounds, which are typically limited to large institutional investors and venture capital firms. The general public will only be able to buy shares if and when the company holds its IPO.

Conclusion: The Future is Automated and Secure

The introduction of Aardvark OpenAI is more than just a product launch. It is a sign of things to come. We are entering an era where AI will not just be a tool we use, but an active partner in building and securing our digital world.

This GPT-5-powered agent has the potential to make our software fundamentally safer, to protect our personal data, and to defend our critical infrastructure. If it delivers on that promise, it could make top-tier security expertise accessible to small startups and massive corporations alike.

While the buzz around an OpenAI IPO and corporate drama is exciting, it is innovations like Aardvark OpenAI that truly define the company’s impact. They are not just building chatbots; they are building the guardians of our digital future.

The private beta for Aardvark OpenAI is now open for applications. As this technology evolves and becomes more widely available, one thing is clear: the balance in cybersecurity is about to shift, and AI is leading the charge.

Frequently Asked Questions (FAQs)

Q1: What is Aardvark OpenAI?

A: Aardvark OpenAI is an autonomous AI security agent powered by GPT-5. It is designed to continuously scan software code, find vulnerabilities, test them, and suggest patches, acting like a human security researcher.

Q2: Is OpenAI going to IPO?

A: Reports indicate that OpenAI is laying the groundwork for a potential IPO. No date is confirmed, but analysts speculate it could go public at a massive valuation, possibly as high as $1 trillion, by 2027.

Q3: Is Microsoft buying OpenAI?

A: No, Microsoft is not buying OpenAI. They have a deep strategic partnership and are a major investor, but OpenAI remains an independent company.

Q4: Why did Elon Musk quit OpenAI?

A: Elon Musk, a co-founder, left the board in 2018 reportedly due to a conflict of interest with Tesla’s own AI development. He has since been publicly critical of the company.

Q5: Who owns 49% of OpenAI?

A: This is a common myth. Microsoft is a major investor, holding roughly 27% after OpenAI’s October 2025 restructuring, but it does not own 49%. OpenAI’s for-profit arm is a public benefit corporation controlled by a nonprofit foundation.

Q6: Could Apple buy OpenAI?

A: It is highly unlikely. OpenAI is structured to maintain its independence. A partnership is more probable than an acquisition by Apple or any other tech giant.

Q7: Does OpenAI make a profit?

A: OpenAI generates significant revenue from products like ChatGPT Plus, its API, and enterprise deals, reportedly more than $13 billion a year. However, it is not yet profitable because its computing costs are so high.

Q8: What is Sam Altman’s net worth?

A: His exact net worth is not public. Altman has said he holds no direct equity in OpenAI; his wealth, estimated in the billions, comes mainly from his other tech investments.

Q9: Can I buy stock in OpenAI?

A: Not currently, as it is a private company. The public will only be able to invest if and when it launches an IPO.

Q10: How does Aardvark OpenAI work?

A: It uses a four-step process: analyzing a codebase to build a threat model, scanning new code commits for vulnerabilities, testing those vulnerabilities in a sandbox, and then generating patches to fix them.